Muhammad Adeel Nisar¹, Kimiaki Shirahama², Frédéric Li¹, Xinyu Huang¹, Marcin Grzegorzek¹

¹ Institute of Medical Informatics, University of Lübeck, Lübeck, Germany

² Department of Informatics, Kindai University, Japan

Note (last updated on April 18, 2023): This webpage is a copy of the one linked in the paper, which was originally hosted on the website of Kindai University (Japan). Because the second author, who manages this webpage, has moved to another institution, the original webpage will soon no longer be accessible. Consequently, all research materials used in the paper have been moved to the second author's current institution, Doshisha University (Japan). The contents of this webpage and the materials it links to are exactly the same as the original ones.

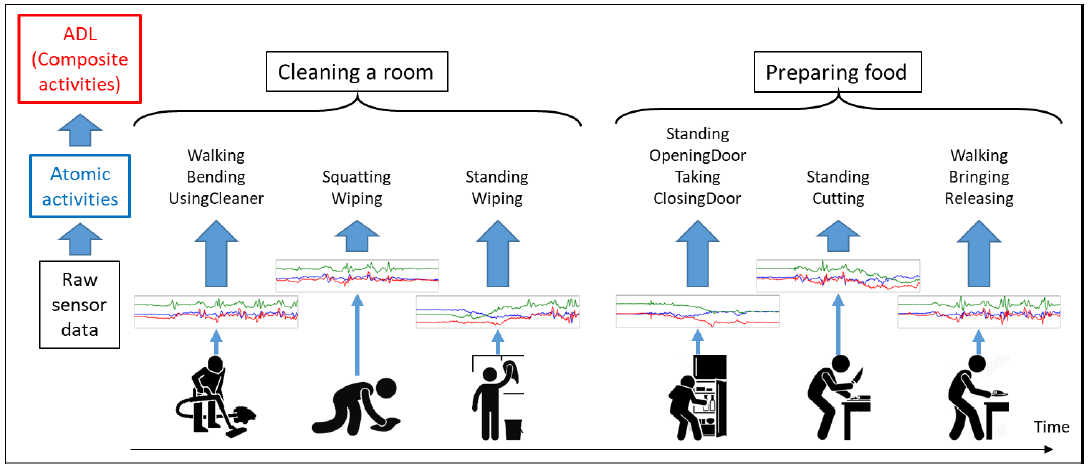

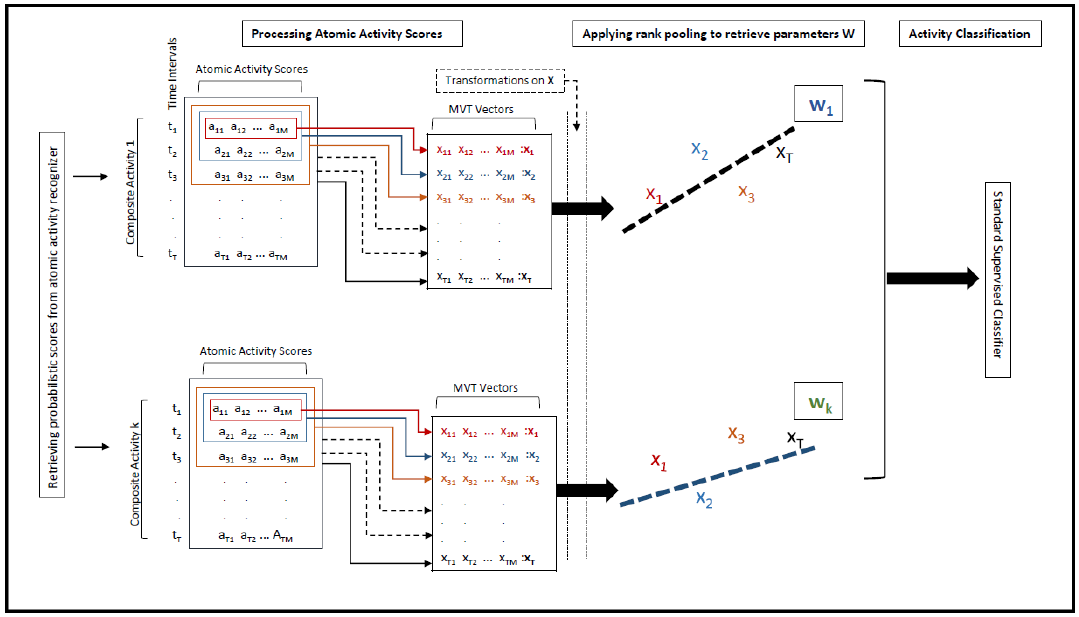

We use wearable devices to recognize activities of daily living (ADLs), which are composed of several repetitive and concurrent short movements with temporal dependencies. Recognizing these long-term (or composite) activities directly from raw sensor data is difficult, because two executions of the same ADL can produce very different sensor data, even though they may be similar in terms of their more semantic and meaningful underlying short-term actions (or atomic activities). We therefore propose a two-level hierarchical model for ADL recognition. At the lower level, atomic activities are detected and their probabilistic scores are generated. At the higher level, the temporal transitions between atomic activities are handled by a temporal pooling method, rank pooling, which encodes the ordering of atomic activities using their scores. Rank pooling learns a function via ranking machines, and the parameters of these ranking machines are used as features to characterize composite activities. Classifiers trained on such features effectively recognize composite activities and improve results by 5-13% compared to other popular techniques. We also introduce a large dataset of 61 atomic and 7 composite activities for our experiments.
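
The rank pooling step described above can be sketched as follows. This is a minimal illustration under our own assumptions, not the authors' implementation: each composite activity is represented as a (T, K) array of atomic-activity scores (T time steps, K atomic activities), the sequence is smoothed by a time-varying mean, and closed-form ridge regression of the time index t is swapped in as the "ranking machine" (rank pooling formulations in the literature use a ranking SVM or support vector regression instead). The signed square root used as a Hellinger map, the regularization weight `lam`, and all function names are hypothetical.

```python
import numpy as np


def time_varying_mean(scores: np.ndarray) -> np.ndarray:
    """Smooth a (T, K) score sequence: row t becomes the mean of rows 0..t."""
    counts = np.arange(1, scores.shape[0] + 1)[:, None]
    return np.cumsum(scores, axis=0) / counts


def hellinger(x: np.ndarray) -> np.ndarray:
    """Signed square root (assumed form of the Hellinger feature map)."""
    return np.sign(x) * np.sqrt(np.abs(x))


def rank_pool(scores, lam=1.0, feature_map=None) -> np.ndarray:
    """Return w such that w . v_t approximately increases with t.

    Ridge regression of the time index onto the smoothed vectors stands in
    for the ranking machine (an assumption, not the paper's exact solver).
    """
    v = time_varying_mean(np.asarray(scores, dtype=float))
    if feature_map is not None:
        v = feature_map(v)
    T, K = v.shape
    t = np.arange(1, T + 1, dtype=float)
    # Closed-form ridge solution: w = (V^T V + lam * I)^-1 V^T t
    return np.linalg.solve(v.T @ v + lam * np.eye(K), v.T @ t)


def rank_pool_fwd_rev(scores, **kwargs) -> np.ndarray:
    """Concatenate rank pooling of the forward and time-reversed sequences."""
    scores = np.asarray(scores, dtype=float)
    return np.concatenate([rank_pool(scores, **kwargs),
                           rank_pool(scores[::-1], **kwargs)])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    seq = rng.random((40, 61))  # placeholder: 40 steps x 61 atomic scores
    feature = rank_pool_fwd_rev(seq, feature_map=hellinger)
    print(feature.shape)
```

The learned vector `w` has one entry per atomic activity regardless of T, which is what lets variable-length composite activities be compared by an ordinary classifier.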

This paper offers two main contributions:

The atomic activities are detected by the codebook approach [1], which outputs a probabilistic score for each atomic activity, and rank pooling uses these probabilistic scores to construct feature vectors for the composite activities. Classifiers trained on the feature vectors obtained from rank pooling turn out to be very effective at distinguishing composite activities. We evaluate rank pooling by comparing it with basic pooling techniques such as average and max pooling, as well as with HMM and LSTM pooling. Rank pooling achieves 5 to 13% higher accuracy than these other pooling techniques.
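
The core idea of the codebook approach in [1] can be illustrated with a minimal sketch for a single 1-D sensor channel, assuming hard assignment and plain k-means: subsequences are extracted with a sliding window, clustered into codewords, and each sequence is then described by the normalized histogram of its nearest codewords. The subsequence length, stride, codebook size `k`, and all function names are hypothetical. In the pipeline described above, such histograms would be fed to a classifier that outputs a probabilistic score per atomic activity; that step is not shown.

```python
import numpy as np


def extract_subsequences(signal, length, stride):
    """Slide a window over a 1-D signal and stack the subsequences as rows."""
    starts = range(0, len(signal) - length + 1, stride)
    return np.stack([signal[s:s + length] for s in starts])


def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd's k-means returning a (k, d) array of codewords."""
    rng = np.random.default_rng(seed)
    centers = points[rng.choice(len(points), size=k, replace=False)].copy()
    for _ in range(iters):
        # Squared Euclidean distance from every point to every center
        dists = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = points[labels == j]
            if len(members) > 0:  # keep the old center if a cluster empties
                centers[j] = members.mean(axis=0)
    return centers


def codebook_histogram(signal, codebook, length, stride):
    """Assign each subsequence to its nearest codeword; return a normalized histogram."""
    subs = extract_subsequences(signal, length, stride)
    dists = ((subs[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    counts = np.bincount(dists.argmin(axis=1), minlength=len(codebook))
    return counts / counts.sum()


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    train = rng.standard_normal(2000)  # placeholder sensor channel
    codebook = kmeans(extract_subsequences(train, length=32, stride=8), k=16)
    print(codebook_histogram(rng.standard_normal(500), codebook, 32, 8))
```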

Please see here for an extension of the codebook approach in [1].

In our experiments, we use the Cognitive Village dataset. We collected data on daily life activities using unobtrusive wearable devices: a smartphone, a smartwatch, and smart glasses. The details of these three wearable devices and their embedded sensors are described below:

We use eight sensor modalities provided by these wearable devices, as mentioned in the above listings. The sensor data from the JINS glasses and the Huawei watch are first sent to the LG-G5 smartphone via Bluetooth, and then all sensor data are forwarded over Wi-Fi through RabbitMQ to our home gateway, where our atomic and composite activity recognition methods are executed.

We have collected data for two kinds of activities, i.e. atomic and composite activities.

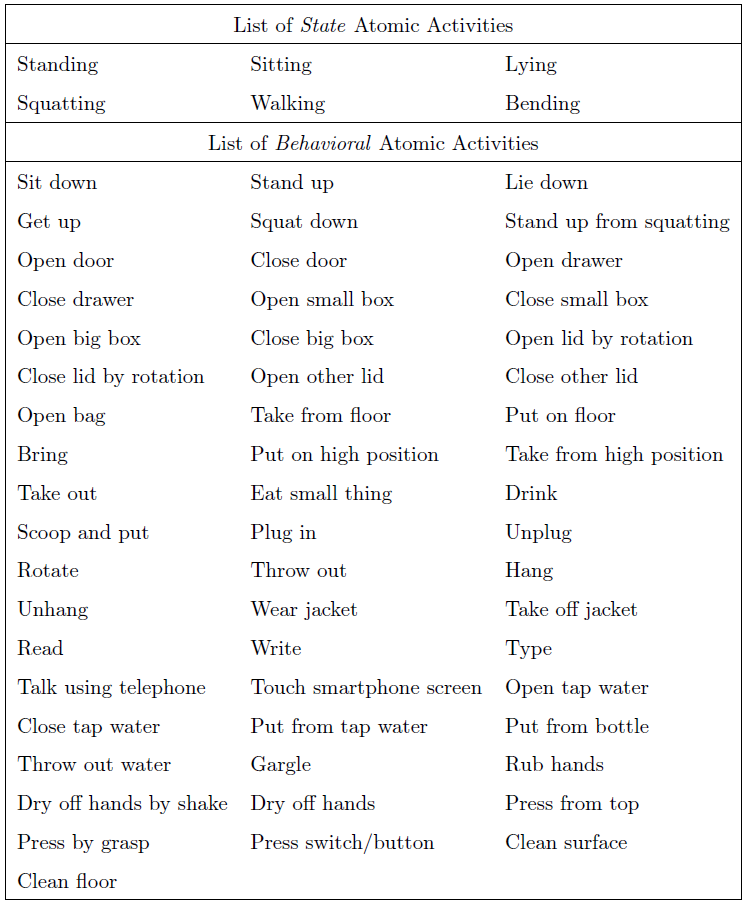

Atomic Activities Dataset: The data acquisition process targets 61 different atomic activities performed by 8 subjects, yielding over 9700 samples. Data for the training and testing phases were collected separately on different days in order to include variations in how the activities are performed. In each phase, the subjects wore the three devices and performed 10 executions of each activity, with every execution lasting 5 seconds. After removing executions whose data were not properly recorded due to sensor errors, 9029 instances remain for our experiments. The atomic activities are split into two distinct categories: 6 state activities characterizing the posture of a subject, and 55 behavioral activities characterizing his or her behavior. The complete list of activities is provided in the table below. Note that a behavioral activity can be performed while in a particular state; e.g., rubbing hands can be performed while sitting, standing, or lying.
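
As a quick consistency check, assuming every subject performed all 61 activities 10 times in both the training and testing phases, the nominal number of executions matches the "over 9700 samples" stated above, and the 9029 retained instances imply that roughly 7.5% of the executions were discarded:

$$
8 \,\text{subjects} \times 61 \,\text{activities} \times 10 \,\text{executions} \times 2 \,\text{phases} = 9760,
\qquad \frac{9760 - 9029}{9760} = \frac{731}{9760} \approx 7.5\%
$$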

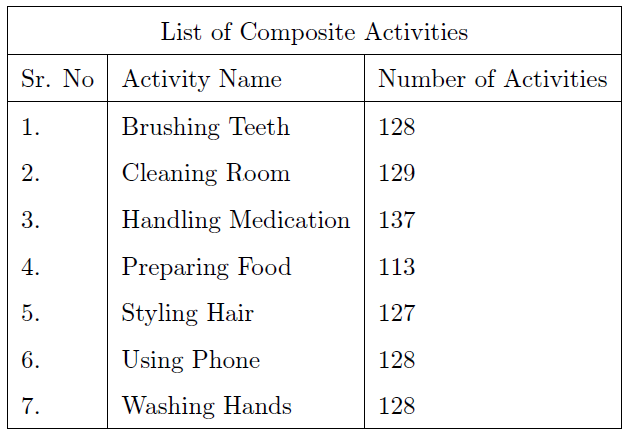

Composite Activities Dataset: The data acquisition process for 7 composite activities was performed by six subjects using the same three wearable devices. An Android-based data acquisition application was developed to collect data for composite activities. The application connected the smartwatch and the smart glasses to the smartphone via Bluetooth and saved the data of the 8 sensor modalities locally in the smartphone's memory. This made it convenient for the subjects to carry the devices into their kitchens, washrooms, or living rooms and perform the activities naturally. As with the atomic activities, the data for composite activities were collected separately for the training and testing phases on different days. We collected over 1000 instances of composite activities, but the experiments were performed on 890 instances because some instances had missing sensor data and were removed from the dataset. As with real-life events, the length of each activity is not fixed; it ranges from 30 seconds to 5 minutes, because some composite activities, like preparing food, take a long time to complete, while others, such as handling medications, are short.
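
Because composite activities vary in length, the sequence of atomic-activity scores that rank pooling receives also varies in length. A minimal sketch of how such a sequence might be produced, assuming a hypothetical 50 Hz sampling rate, 5-second windows (matching the length of one atomic execution) with 50% overlap, and a placeholder scorer standing in for the trained atomic-activity classifier:

```python
import numpy as np


def atomic_score_sequence(stream, window, stride, atomic_scorer):
    """Split an (N, C) multichannel stream into windows and score each one.

    Returns a (T, K) array; T grows with the duration of the activity.
    """
    starts = range(0, stream.shape[0] - window + 1, stride)
    return np.stack([atomic_scorer(stream[s:s + window]) for s in starts])


def toy_scorer(segment, n_atomic=61):
    """Hypothetical placeholder, NOT a trained classifier: softmax over
    channel means tiled to n_atomic entries."""
    logits = np.resize(segment.mean(axis=0), n_atomic)
    e = np.exp(logits - logits.max())
    return e / e.sum()


if __name__ == "__main__":
    rate = 50  # hypothetical sampling rate in Hz
    rng = np.random.default_rng(0)
    for seconds in (30, 300):  # shortest and longest composite durations
        stream = rng.standard_normal((seconds * rate, 3))
        seq = atomic_score_sequence(stream, window=5 * rate,
                                    stride=5 * rate // 2,
                                    atomic_scorer=toy_scorer)
        print(seconds, seq.shape)
```

With these assumed settings, a 30-second activity yields 11 score vectors and a 5-minute activity yields 119, which is why a pooling step that outputs a fixed-length feature is needed.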

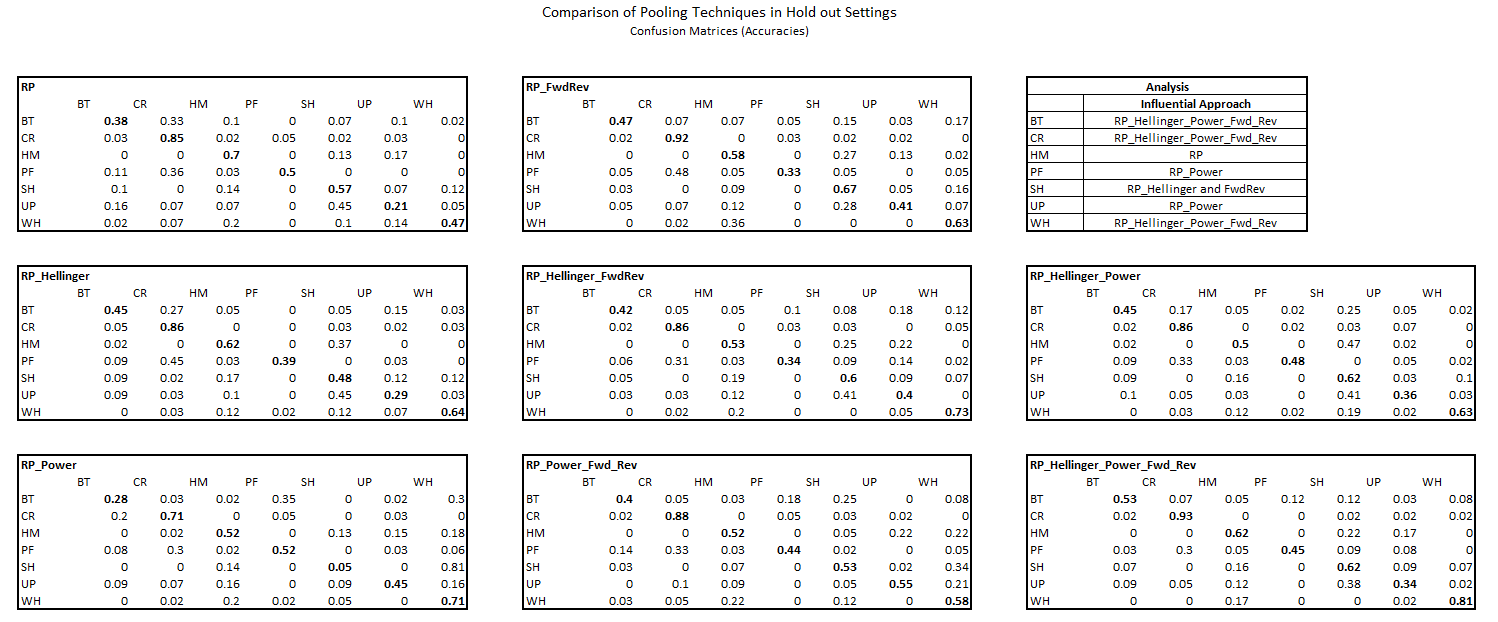

The following table shows the confusion matrices (accuracies) related to the results of Table 3 in the paper.

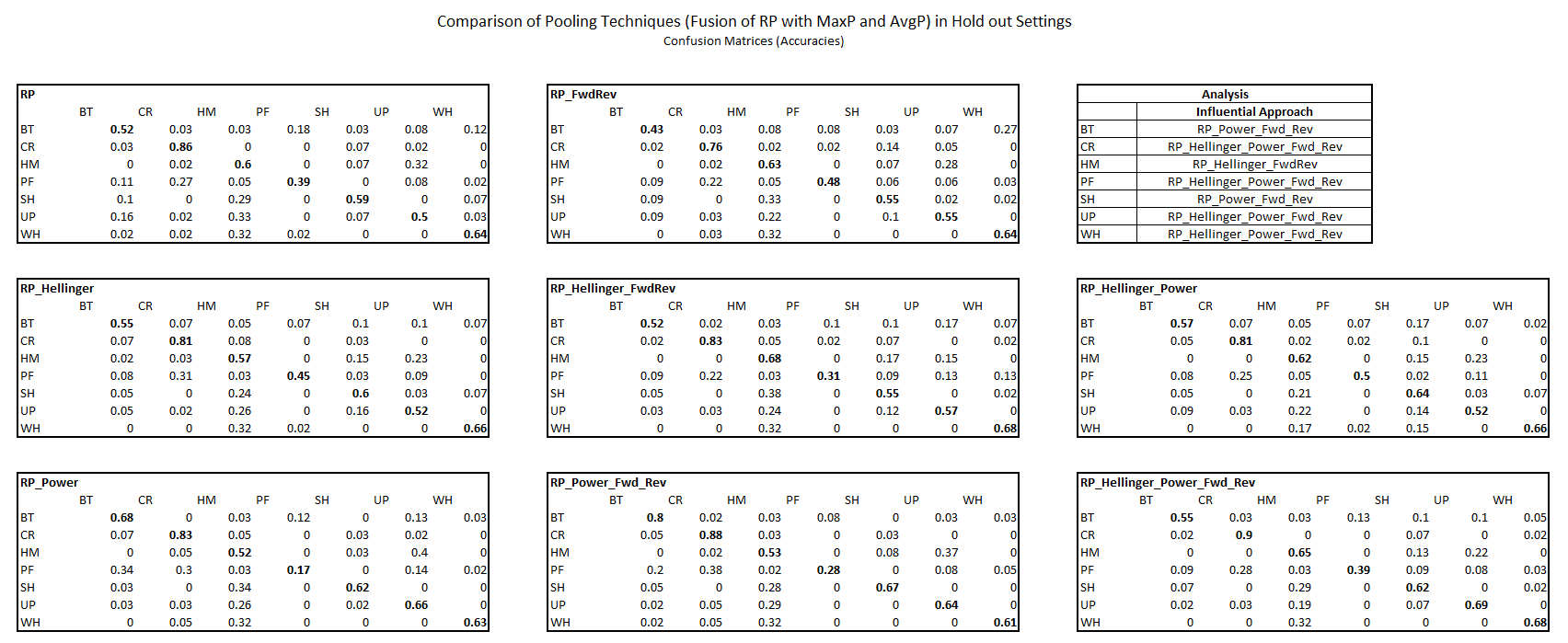

The following table shows the confusion matrices (accuracies) related to the results of Table 5 in the paper.

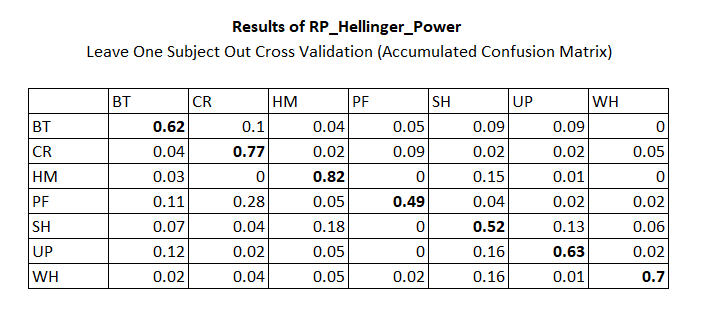

The following table shows the accumulated confusion matrix (accuracies) related to the results of Table 4 in the paper.

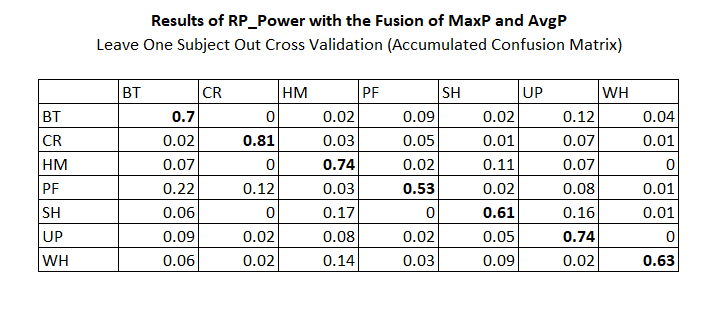

The following table shows the accumulated confusion matrix (accuracies) related to the results of Table 6 in the paper.

Discussion: The above tables show the classification accuracies in the form of confusion matrices for different Rank Pooling (RP) variations. Table C shows the confusion matrices related to the comparison of different pooling techniques in our paper. The Hellinger and Power non-linear kernels clearly increase the accuracies in the majority of cases. For example, for BrushingTeeth (BT), basic RP achieves only 38% accuracy, compared to 53% for RP_Hellinger_Power_FwdRev. Similarly, for UsingPhone (UP), basic RP achieves only 21% accuracy, whereas RP_Power_Fwd_Rev achieves 55%. We have observed that some composite activities have very distinctive underlying atomic activities, and these are recognized with very high accuracy by the majority of RP variations. For example, CleaningRoom (CR) is accurately classified by basic RP as well as by the non-linear RP variants. On the other hand, a large misclassification is observed for the PreparingFood (PF) activity. A closer look at Table C reveals that PF is mainly confused with CR. This is perhaps because PF involves actions such as cleaning the kitchen counter and picking up and placing cooking tools. Since we collected data in daily life settings, it is highly probable that these CR-related actions caused confusion with PF.
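
The per-class accuracies discussed above are the diagonal entries of a row-normalized confusion matrix, and the "mainly confused with" relation (such as PF with CR) is the largest off-diagonal entry in a row. A minimal sketch, using made-up counts for three generic classes rather than any results from the paper:

```python
import numpy as np


def row_normalize(conf):
    """Divide each row (true class) of a count matrix by its total."""
    conf = np.asarray(conf, dtype=float)
    return conf / conf.sum(axis=1, keepdims=True)


def most_confused_with(conf):
    """For each true class, the predicted class it is most often mistaken for."""
    off = np.asarray(conf, dtype=float).copy()
    np.fill_diagonal(off, -np.inf)  # ignore correct predictions
    return off.argmax(axis=1)


if __name__ == "__main__":
    counts = np.array([[8, 2, 0],   # hypothetical counts, rows = true class
                       [3, 6, 1],
                       [0, 0, 10]])
    print(np.diag(row_normalize(counts)))  # per-class accuracy
    print(most_confused_with(counts))
```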

Table D shows the results of fusing RP with max and average pooling. The fusion of multiple pooling techniques clearly improves the accuracies of the composite activities for the corresponding RP variations. For example, basic RP with fusion (Table D) produces better results than its non-fused version (Table C) for most activities.
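
One simple way to fuse pooling techniques is early fusion by concatenation, sketched below. This is an assumption for illustration; the exact fusion scheme behind Table D may differ. The rank-pooled vector is passed in precomputed so that the sketch stays self-contained, and the function names are hypothetical.

```python
import numpy as np


def average_pool(scores):
    """Mean of each atomic-activity score over time."""
    return scores.mean(axis=0)


def max_pool(scores):
    """Maximum of each atomic-activity score over time."""
    return scores.max(axis=0)


def fused_features(scores, rank_pooled):
    """Concatenate a rank-pooled vector with average- and max-pooled scores."""
    scores = np.asarray(scores, dtype=float)
    return np.concatenate([np.asarray(rank_pooled, dtype=float),
                           average_pool(scores), max_pool(scores)])


if __name__ == "__main__":
    seq = np.array([[0.1, 0.9], [0.5, 0.5], [0.3, 0.7]])  # toy (T=3, K=2)
    print(fused_features(seq, rank_pooled=[1.0, -1.0]))
```

Average and max pooling discard temporal order, while rank pooling encodes it, so concatenating them gives the classifier both kinds of information.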

Tables E and F show the accumulated results in the leave-one-subject-out cross-validation setting. RP_Hellinger_Power and RP_Power produced the best results among the RP variations in their respective data settings, with RP_Power (Table F) producing the best results in our experimental setup overall. In the discussion above, we noted that CR has a more distinctive structure, whereas PF has a more complex structure than the other activities, and most of the RP variations in Tables C and D had difficulty recognizing PF. However, Table F shows that RP_Power classifies both of these activities, along with the others, with very good accuracy, and in particular yields its best results for PF.
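
Leave-one-subject-out evaluation holds out all instances of one subject per fold, and an accumulated confusion matrix sums the per-fold counts before normalizing. A minimal sketch, with hypothetical function names and no particular classifier assumed:

```python
import numpy as np


def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_idx, test_idx) for each distinct subject."""
    subject_ids = np.asarray(subject_ids)
    for s in np.unique(subject_ids):
        test = np.flatnonzero(subject_ids == s)
        train = np.flatnonzero(subject_ids != s)
        yield s, train, test


def accumulate_confusion(y_true, y_pred, n_classes, total=None):
    """Add one fold's predictions to a running confusion matrix of counts."""
    if total is None:
        total = np.zeros((n_classes, n_classes), dtype=int)
    # Unbuffered add so repeated (true, pred) pairs are all counted
    np.add.at(total, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return total


if __name__ == "__main__":
    subjects = [1, 1, 2, 2, 3]
    for s, train, test in leave_one_subject_out(subjects):
        print(s, train, test)
```

With six subjects, as in the composite activity dataset, this yields six folds. scikit-learn's `LeaveOneGroupOut` and `confusion_matrix` provide equivalent functionality if that library is already in use.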

An overview of Tables C to F reveals that different RP techniques are effective for recognizing different composite activities. This motivates future work on an algorithm-selection technique based on the type of activity.

Reference

[1] K. Shirahama, M. Grzegorzek, On the Generality of Codebook Approach for Sensor-based Human Activity Recognition, Electronics, Vol. 6, No. 2, Article No. 44, 2017

All research materials used for the studies are provided at the following repository:

For any question about the paper, study or code, please contact the main author of the publication.

Please reference the following publication [2] if you use any of the codes and/or datasets linked above:

[2] Muhammad Adeel Nisar, Kimiaki Shirahama, Frédéric Li, Xinyu Huang, Marcin Grzegorzek, Rank Pooling Approach for Wearable Sensor-Based ADLs Recognition, Sensors, Vol. 20, No. 12, Article No. 3463, 2020 (open access)