Frédéric Li¹, Kimiaki Shirahama², Muhammad Adeel Nisar¹, Xinyu Huang¹, Marcin Grzegorzek¹

¹ Institute of Medical Informatics, University of Lübeck, Germany

² Department of Informatics, Kindai University, Japan

Note (last updated on April 18, 2023): This webpage is a copy of the one linked in the paper, which was originally hosted on the website of Kindai University (Japan). Because the second author, who manages this webpage, has moved to another institution, the original webpage will soon no longer be accessible. Consequently, all research materials used in the paper have been moved to the second author's current institution, Doshisha University (Japan). The contents of this webpage and the materials it links to are exactly the same as the original ones.

Deep Neural Networks (DNNs) have established themselves as one of the main state-of-the-art models for most pattern recognition problems, such as classification and regression. In addition to their notable success in image processing tasks such as image classification, image retrieval and object detection, DNNs have been shown to achieve state-of-the-art performance on problems involving time-series data.

Properly training a DNN can, however, prove difficult in practice. The typically high number of parameters of a DNN usually makes a large training set necessary to avoid overfitting. The overall scarcity of time-series data, and in particular the lack of large-scale, publicly available labeled time-series datasets, can make this issue quite challenging.

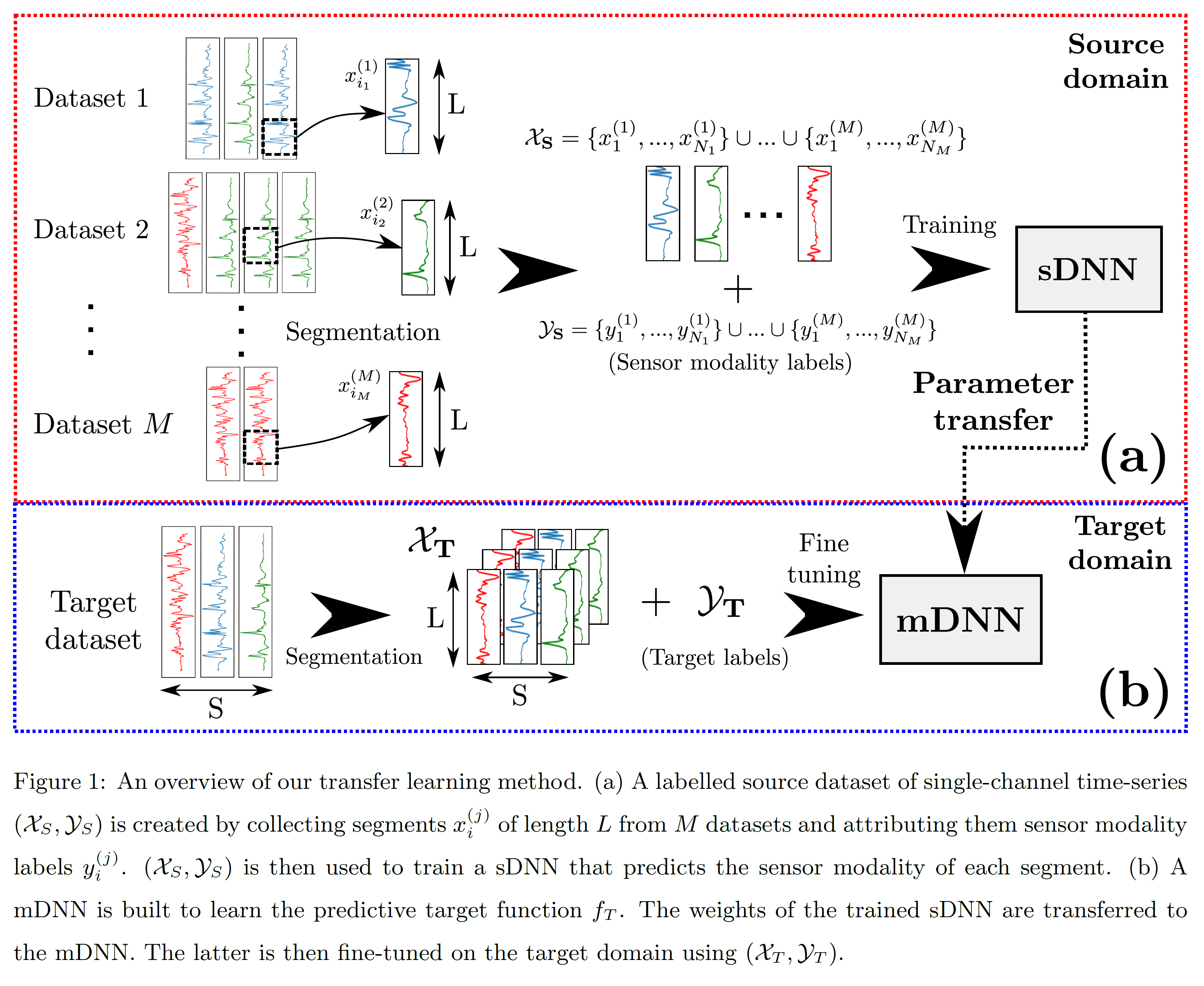

Transfer learning is an approach that can alleviate such data requirements. It consists of extracting knowledge from a source domain and using it to improve the learning of a model on a target domain (not necessarily related to the source domain). For DNNs, transfer learning consists of transferring the weights and biases learned on the source domain to initialize another model, which is then fine-tuned on the target domain. Many past works have shown that transfer learning improves performance on image processing problems, to the point that re-using already trained models such as AlexNet, VGG Net or ResNet for more specific applications has become standard practice. For time-series processing, however, studies on this topic are much scarcer, and the general effectiveness of transfer learning has not yet been proven.
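The weight transfer described above can be sketched in a few lines. This is a minimal NumPy illustration, not the code used in the paper: models are represented as hypothetical dictionaries mapping layer names to arrays, every source parameter whose name and shape match a target parameter is copied, and any mismatching layer (typically the output layer, whose size depends on the number of classes) keeps its fresh initialization. Fine-tuning on the target domain would then start from the returned parameters.

```python
import numpy as np

def transfer_parameters(source, target):
    """Copy every source weight/bias whose name and shape match the target.

    Parameters without a match (e.g. an output layer sized for a different
    number of classes) keep their fresh initialization and are learned from
    scratch during fine-tuning.
    """
    initialized = {}
    copied = []
    for name, value in target.items():
        src = source.get(name)
        if src is not None and src.shape == value.shape:
            initialized[name] = src.copy()
            copied.append(name)
        else:
            initialized[name] = value.copy()
    return initialized, copied

rng = np.random.default_rng(0)
# Hypothetical layer names and sizes, for illustration only.
source_model = {
    "conv1.W": rng.normal(size=(5, 1, 16)), "conv1.b": np.zeros(16),
    "fc.W": rng.normal(size=(64, 32)), "fc.b": np.zeros(32),
    "out.W": rng.normal(size=(32, 10)), "out.b": np.zeros(10),  # 10 source classes
}
target_model = {
    "conv1.W": rng.normal(size=(5, 1, 16)), "conv1.b": np.zeros(16),
    "fc.W": rng.normal(size=(64, 32)), "fc.b": np.zeros(32),
    "out.W": rng.normal(size=(32, 6)), "out.b": np.zeros(6),  # 6 target classes
}
init, copied = transfer_parameters(source_model, target_model)
print(copied)  # ['conv1.W', 'conv1.b', 'fc.W', 'fc.b']
```

Matching on both name and shape keeps the sketch agnostic to the framework; real libraries offer their own mechanisms for loading a subset of pretrained weights.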

Based on the observation that, for image processing problems, the best transfer performance was obtained after training a DNN discriminatively, in a supervised way, on a source domain as large as possible (ImageNet), we propose a similar approach for time-series transfer learning in this paper. We train a single-channel DNN as a sensor modality classifier, using easily available sensor-modality labels, on a source domain composed of four time-series datasets, chosen to be as diverse as possible, from the UCI Machine Learning Repository:
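One way to build such a source training set, assuming the sensor type of every channel is known from the dataset description, is to split multichannel segments into single-channel samples and label each one with the modality of the channel it came from. A minimal sketch, assuming segments stored as an (N, T, C) array; the modality names and ids are hypothetical placeholders, since the actual modalities depend on the four UCI source datasets:

```python
import numpy as np

def to_single_channel(segments, channel_modalities, modality_to_id):
    """Turn (N, T, C) multichannel segments into (N*C, T) single-channel
    samples, each labeled with the sensor modality of its channel."""
    n, t, c = segments.shape
    if len(channel_modalities) != c:
        raise ValueError("need exactly one modality name per channel")
    # (N, T, C) -> (N, C, T) -> (N*C, T): sample 0 channels first, then sample 1, ...
    x = segments.transpose(0, 2, 1).reshape(n * c, t)
    channel_labels = np.array([modality_to_id[m] for m in channel_modalities])
    # Repeat the per-channel labels once per segment, in the same order as x.
    y = np.tile(channel_labels, n)
    return x, y

# Hypothetical modality vocabulary, for illustration only.
modality_to_id = {"accelerometer": 0, "gyroscope": 1, "temperature": 2}
segments = np.arange(2 * 4 * 3, dtype=float).reshape(2, 4, 3)  # N=2, T=4, C=3
x, y = to_single_channel(
    segments, ["accelerometer", "accelerometer", "gyroscope"], modality_to_id
)
print(x.shape, y)  # (6, 4) [0 0 1 0 0 1]
```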

The performance of our transfer approach was tested on two application domains of wearable-based computing: Human Activity Recognition (HAR) and Emotion Recognition (ER). One dataset per application domain was used as the target domain in our studies:

The results reported in our paper show that our technique can obtain better performance than training without any transfer (i.e., with Glorot initialization of the weights) on both target datasets. This result holds even for very small quantities of training data. Future work will focus on checking the generalization capability of the method by testing different parameters on the source and target domains.
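For reference, the no-transfer baseline initializes the weights with Glorot (Xavier) initialization. A minimal sketch of the uniform variant, which draws each weight from $U(-a, a)$ with $a = \sqrt{6 / (n_{in} + n_{out})}$, giving a weight variance of $a^2/3 = 2 / (n_{in} + n_{out})$. Whether the paper used the uniform or the normal variant is not stated on this page, so the uniform choice here is an assumption:

```python
import numpy as np

def glorot_uniform(fan_in, fan_out, rng=None):
    """Glorot/Xavier uniform initialization (uniform variant assumed)."""
    rng = np.random.default_rng() if rng is None else rng
    # Bound chosen so that Var(W) = 2 / (fan_in + fan_out).
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=(fan_in, fan_out))

W = glorot_uniform(64, 32, np.random.default_rng(42))
```

With `fan_in=64` and `fan_out=32`, the bound is $\sqrt{6/96} = 0.25$, so every entry of `W` lies in $[-0.25, 0.25]$.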

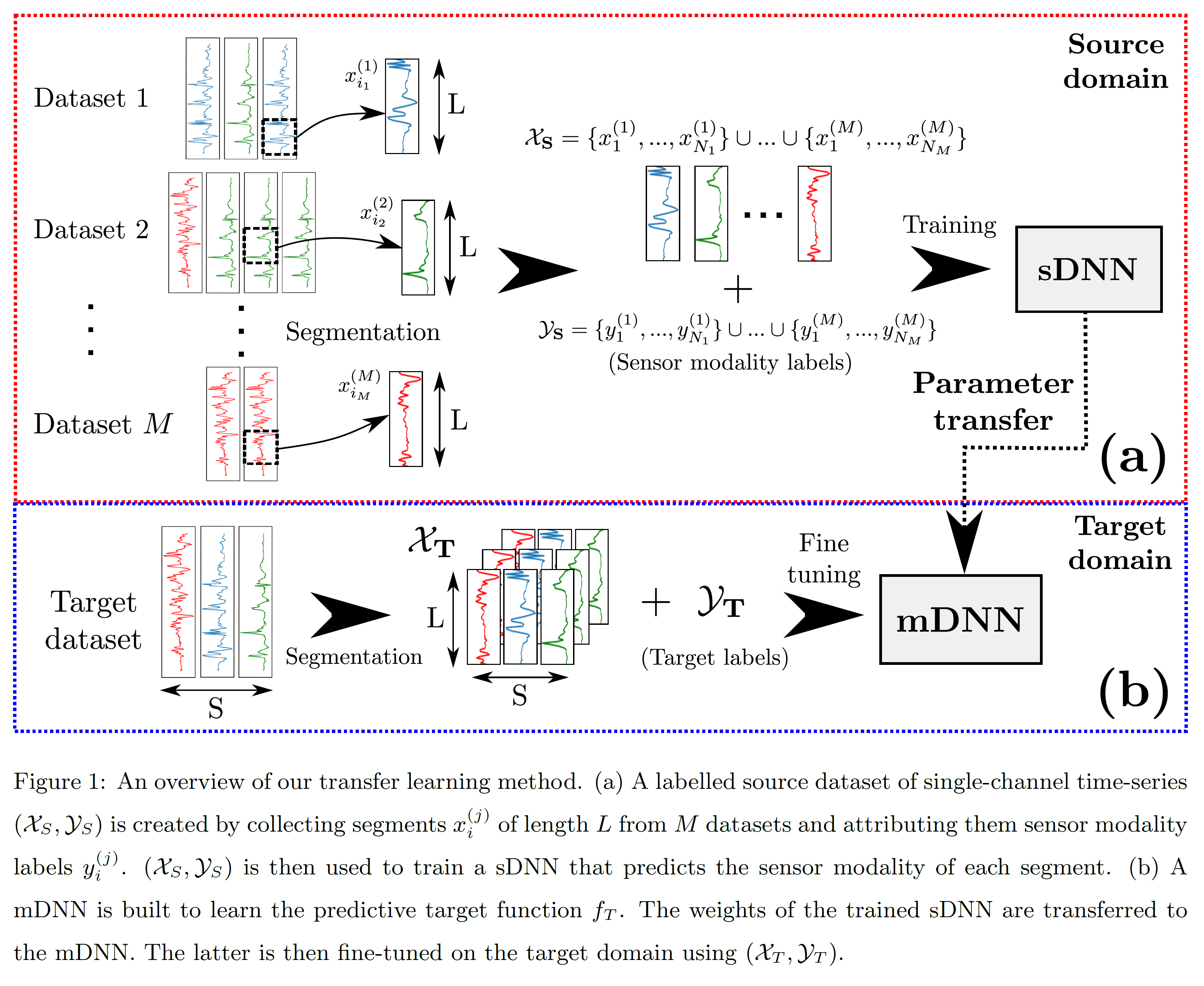

The CogAge dataset provides a basis for researchers who consider human activities as sequences of simpler actions, also referred to as atomic activities. It contains data from 4 subjects performing a total of 61 different atomic activities, split into two distinct categories: 6 state activities characterizing the pose of the subject, and 55 behavioral activities characterizing his/her behavior. Note that a behavioral activity can be performed while being in a particular state (e.g., drinking can be performed either while sitting or while standing). Because this overlap between state and behavioral activities could prevent a proper definition of classes (e.g., drinking while sitting could be classified either as drinking or as sitting), two distinct classification problems were considered: one covering exclusively the 6 state activities, the other only the 55 behavioral activities.

The data was acquired using 3 devices:

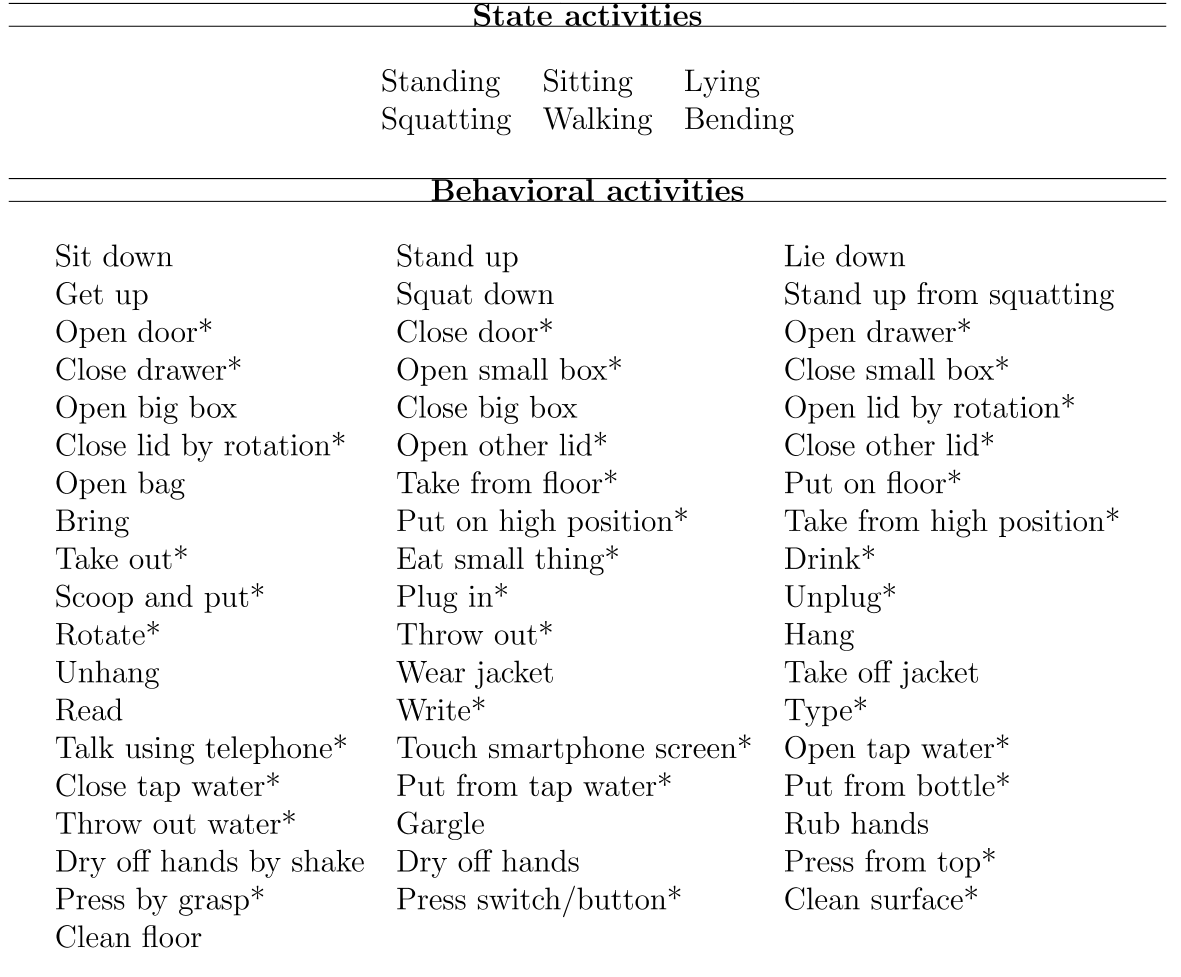

Each subject performed two data acquisition sessions. In each session, each of the 61 activities was performed at least 10 times, with each execution lasting 5 seconds. For some behavioral activities, executions were performed with either the left or the right hand.
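As a worked example, these figures give a lower bound on the size of the raw dataset (any executions beyond the stated minimum of 10 would only increase it):

$$N_{\min} = \underbrace{4}_{\text{subjects}} \times \underbrace{2}_{\text{sessions}} \times \underbrace{61}_{\text{activities}} \times \underbrace{10}_{\text{executions}} = 4880 \text{ executions},$$

$$T_{\min} = 4880 \times 5\,\text{s} = 24400\,\text{s} \approx 6.8\,\text{h}.$$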

Because the smartwatch was always worn on the left arm of the subjects, the arm used to perform some of the behavioral atomic activities may matter and affect the classification difficulty. For this reason, the full dataset was split into 3 subsets: the state, Behavioral Left-Hand Only (BLHO) and Behavioral Both-Hands (BBH) datasets. They contain, respectively, the state activities, the behavioral activities performed with the left hand, and the behavioral activities performed with either hand.
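The three-way split can be expressed as simple filters over annotated executions. A minimal sketch, assuming each execution carries its category and, where relevant, the hand used; the `Execution` record and its field values are hypothetical, and behavioral executions without a hand annotation are assumed here to belong to both behavioral subsets:

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Execution:  # hypothetical record type, for illustration only
    activity: str
    category: str          # "state" or "behavioral"
    hand: Optional[str]    # "left", "right", or None when no hand is recorded

def split_subsets(
    executions: List[Execution],
) -> Tuple[List[Execution], List[Execution], List[Execution]]:
    state = [e for e in executions if e.category == "state"]
    behavioral = [e for e in executions if e.category == "behavioral"]
    # BLHO: drop right-hand executions (assumption: un-annotated ones are kept).
    blho = [e for e in behavioral if e.hand != "right"]
    # BBH: behavioral executions performed with either hand.
    bbh = behavioral
    return state, blho, bbh

executions = [
    Execution("sitting", "state", None),
    Execution("standing", "state", None),
    Execution("drinking", "behavioral", "left"),
    Execution("drinking", "behavioral", "right"),
]
state, blho, bbh = split_subsets(executions)
print(len(state), len(blho), len(bbh))  # 2 1 2
```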

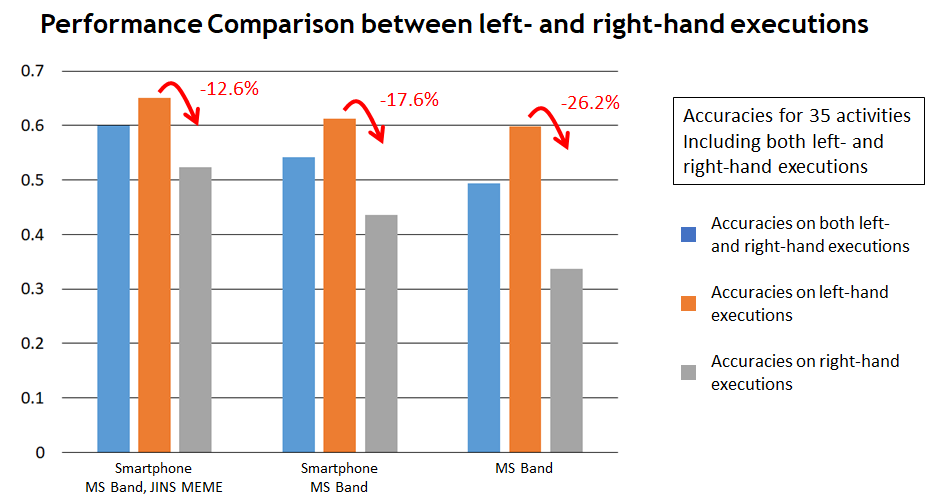

A baseline evaluation was performed for activity classification using a Codebook approach with hard assignment [1] [2] for feature extraction, followed by an SVM classifier. Thirty-five behavioral activities which could be performed with either the left or the right hand were classified in three different sensor setups: using all devices, using only the smartphone and the Microsoft Band, and using only the Microsoft Band. The study shows that a reasonable recognition rate remains achievable for activities performed with the right hand only, despite the device being worn on the left arm. Using more devices reduces the performance gap between left-hand and right-hand executions.
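The Codebook approach with hard assignment [1] can be sketched as follows: subsequences are extracted from each time series with a sliding window, clustered with k-means to obtain a codebook of representative subsequences (codewords), and each series is then described by the normalized histogram of its nearest codewords. This is a simplified single-channel NumPy sketch; the window length, stride and codebook size below are hypothetical, not the values used in the baseline:

```python
import numpy as np

def subsequences(series, window, stride):
    """Slide a window of length `window` over a 1-D series with step `stride`."""
    starts = range(0, len(series) - window + 1, stride)
    return np.stack([series[s:s + window] for s in starts])

def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd's k-means; returns a (k, window) codebook."""
    rng = np.random.default_rng(seed)
    centers = points[rng.choice(len(points), size=k, replace=False)].astype(float)
    for _ in range(iters):
        dists = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        assign = dists.argmin(axis=1)
        for j in range(k):
            members = points[assign == j]
            if len(members):  # keep the old center if a cluster becomes empty
                centers[j] = members.mean(axis=0)
    return centers

def hard_assignment_histogram(series, codebook, window, stride):
    """Assign each subsequence to its nearest codeword; return normalized counts."""
    subs = subsequences(series, window, stride)
    dists = ((subs[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    counts = np.bincount(dists.argmin(axis=1), minlength=len(codebook))
    return counts / len(subs)

rng = np.random.default_rng(1)
train_series = [rng.normal(size=200) for _ in range(5)]  # dummy data
window, stride, k = 16, 8, 4  # hypothetical parameters
all_subs = np.concatenate([subsequences(s, window, stride) for s in train_series])
codebook = kmeans(all_subs, k)
feature = hard_assignment_histogram(train_series[0], codebook, window, stride)
```

The resulting histograms would then serve as feature vectors for an SVM, for instance scikit-learn's `sklearn.svm.SVC`.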

The dataset is stored in the .data format. A pre-processed version of the dataset is also provided in the Python formats .pkl (dictionary) and .npy (arrays). The data are available at the following repository [link].
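A minimal sketch of reading the pre-processed files with the standard `pickle` module and `numpy.load`. The actual file names and dictionary keys in the repository are not listed on this page, so the demo below round-trips placeholder data through a temporary directory:

```python
import os
import pickle
import tempfile

import numpy as np

def load_pickle(path):
    """Load a pickled Python object (a dictionary, for the .pkl files)."""
    with open(path, "rb") as f:
        return pickle.load(f)

def load_array(path):
    """Load a single NumPy array saved with np.save (.npy)."""
    return np.load(path, allow_pickle=False)

# Round-trip demo with dummy data; file names and keys are placeholders.
with tempfile.TemporaryDirectory() as tmp:
    pkl_path = os.path.join(tmp, "example.pkl")
    npy_path = os.path.join(tmp, "example.npy")
    with open(pkl_path, "wb") as f:
        pickle.dump({"data": np.zeros((3, 4)), "labels": [0, 1, 1]}, f)
    np.save(npy_path, np.ones((3, 4)))
    d = load_pickle(pkl_path)
    arr = load_array(npy_path)
```

Since `pickle.load` can execute arbitrary code, only unpickle files obtained from a trusted source such as the repository above.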

References

[1] On the Generality of Codebook Approach for Sensor-based Human Activity Recognition, K. Shirahama, M. Grzegorzek, Electronics, Vol. 6, No. 2, Article No. 44, 2017

[2] Comparison of Feature Learning Methods for Human Activity Recognition using Wearable Sensors, F. Li, K. Shirahama, M. A. Nisar, L. Koeping, M. Grzegorzek, Sensors, Vol. 18, No. 2, Article No. 679, 2018

[IMPORTANT NOTE: for data privacy reasons, we are not allowed to share the DEAP target data used in our studies. To obtain access to the DEAP dataset, please go to the following webpage.]

The research materials used for the studies in the paper are provided here. These include in particular:

For any question about the paper, the study or the code, please contact the main author of the publication, whose contact details can be found here.

Please cite the following publication [1] if you use any of the code and/or datasets linked above:

[1] Frédéric Li, Kimiaki Shirahama, Muhammad Adeel Nisar, Xinyu Huang, Marcin Grzegorzek, Deep Transfer Learning for Time-series Data Based on Sensor Modality Classification, Sensors, Vol. 20, No. 15, Article No. 4271, 2020 [Link to paper]